HarmonyOS Human-Computer Interaction Guidelines

In an all-scenario digital experience, an increasing variety of smart devices are distributed throughout users' daily lives, with interactive user interfaces widely present on smartphones, tablets, PCs, smart wearables, TVs, vehicle systems, virtual reality (VR), and augmented reality (AR) devices. Applications may run on multiple devices or be controlled by users through various input methods on a single device. This requires the user interface to automatically recognize and support different input methods, and enable users to interact with it in a familiar and comfortable way.

In all-scenario human-computer interaction, the core philosophy of HarmonyOS is to "provide interactions that match the user's current state, ensuring consistency in user experience". For example, when an application runs on a touchscreen device, users can long-press with a finger to open the context menu; when it runs on a PC, users can right-click with the mouse to open the same menu. Typical input methods include, but are not limited to, direct interactions such as a finger or stylus on a touchscreen; indirect interactions such as a mouse, touchpad, keyboard, crown, remote control, vehicle joystick, knob, game controller, or in-air gesture; and voice interaction.

When designing and developing applications, designers and developers should assume that users may use multiple input methods, and ensure that the application responds correctly and in a user-friendly manner to whichever input method is currently in use. Our goal is to achieve "develop once, deploy on multiple devices" in the all-scenario experience, ultimately improving the consistency of the user experience.

Role

Project Leader. Defined the human-computer interaction guidelines for the various interaction and input methods of HarmonyOS; led capability building in the OS input subsystem and UI components; promoted optimization and adaptation across first-party and third-party apps to improve consistency in user experience.

Interaction Guidelines

Input Methods

First, we define the characteristics, functions, and interaction behaviors of the various interaction modes and input devices. Then, for each interaction task, we standardize the interaction rules that apply to each input device. For details, see the official design guides.

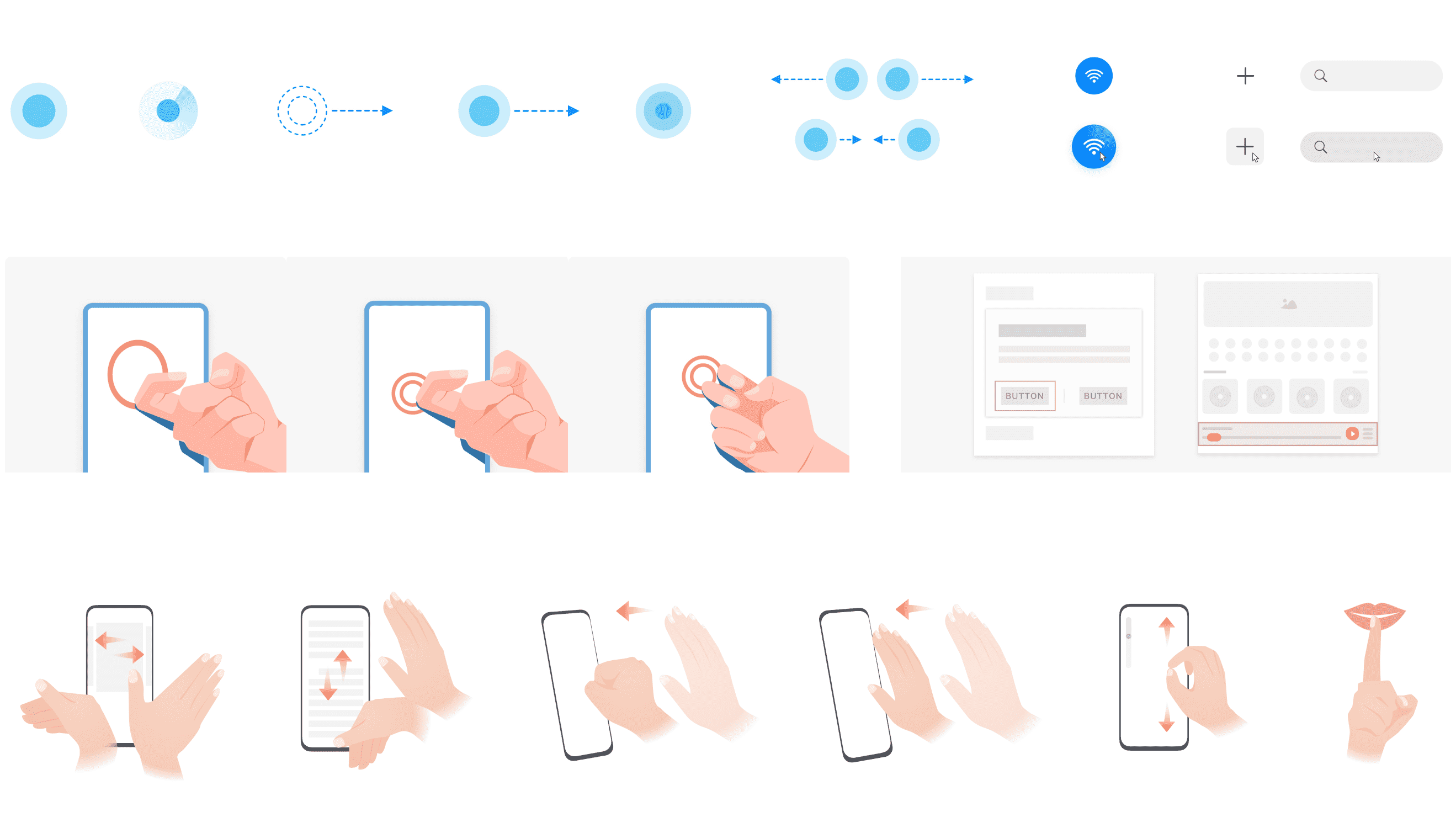

- Touch-based Interaction: Basic Gestures | Feature Gestures

- Cursor-based Interaction: Cursor Forms | Hover Object States | Additional Information Display | Precision Operations | Cursor Movement

- Focus-based Interaction: Basic Principles | Focus Navigation Order

- Mouse: Expected Behaviors | Relationship Between Mouse and Touch

- Touchpad: Expected Behaviors

- Keyboard: Focus Navigation | Basic and Standard Shortcuts | Custom Shortcuts

- Stylus: With Physical Buttons | Without Physical Buttons | Hand-drawing Kit

- In-air Gesture: Design Principles | Common Gestures

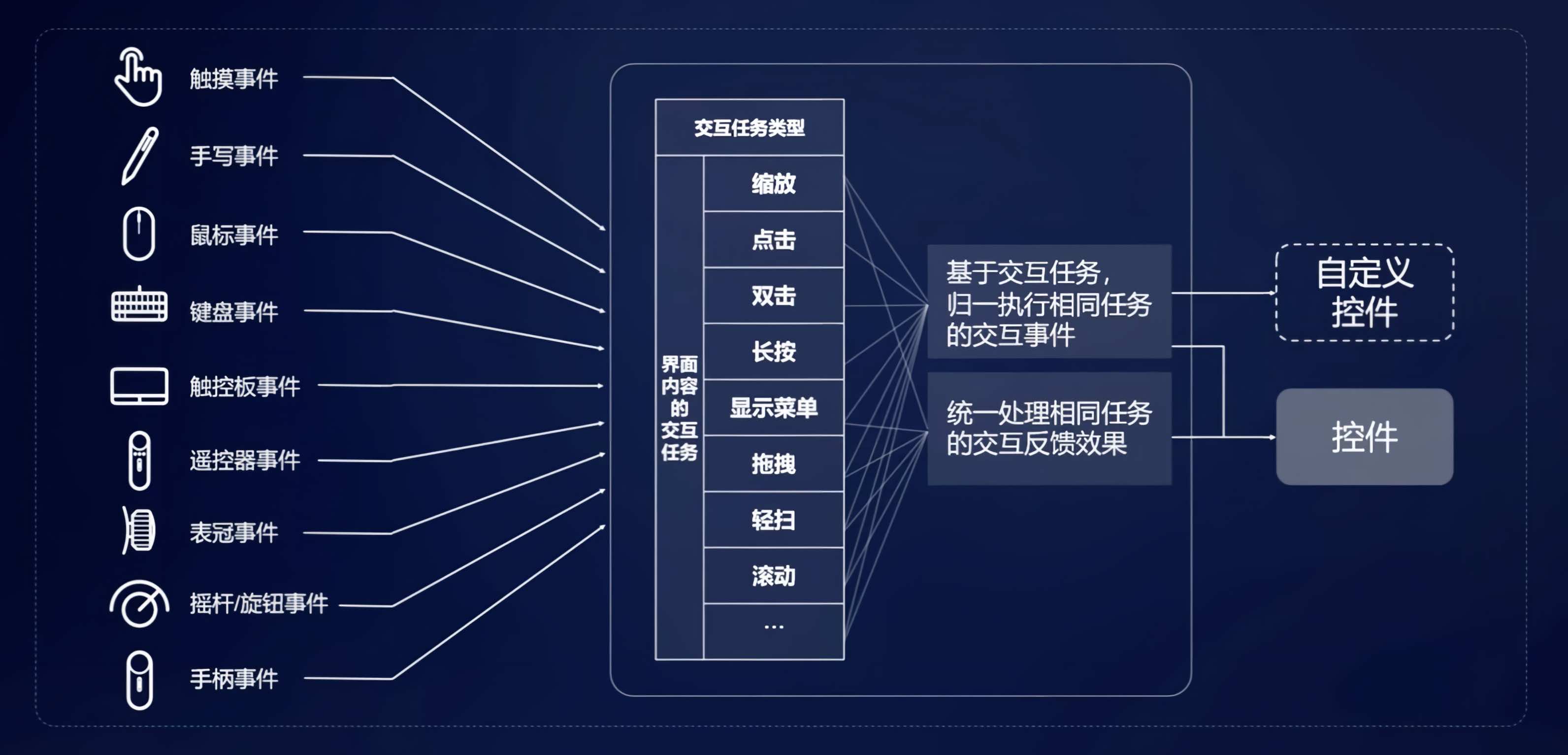

Unified Interaction Events

Based on the above interaction design standards, we propose the idea of "unification of interactions". We extract basic atomic interaction events and map the various input methods onto these atomic events at the OS level. The advantage is that designers and developers no longer need to adapt each function to every input method separately, which reduces development cost and improves consistency.

For example, for the atomic interaction event "zoom object," the rules defined for different input methods are:

| Input Method | Interaction Behavior |
|---|---|
| Touchscreen | Pinch out with two fingers on the screen to enlarge content, pinch in to reduce content. |
| Mouse | Hold down the Ctrl key and scroll the mouse wheel to enlarge or reduce content based on the cursor position. |
| Touchpad | The pinch gesture with two fingers on the touchpad is consistent with the two-finger pinch gesture on the touchscreen. When the cursor moves onto an object: optimize the control-display ratio to allow users to easily, quickly, and accurately adjust to the target size. |
| Keyboard | Ctrl + Plus Key: Enlarges the object N times centered on the object. Ctrl + Minus Key: Reduces the object to 1/N times centered on the object. |
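The "zoom object" rules in the table can be sketched as a single mapping function. This is only an illustration under stated assumptions: the `RawInput` and `ZoomEvent` types, the `toZoomEvent` function, the step constants, and the wheel direction are all hypothetical and are not taken from the HarmonyOS SDK or the official design guides.

```typescript
// Hypothetical raw input shapes; these are NOT HarmonyOS SDK types.
type RawInput =
  | { device: "touchscreen"; kind: "pinch"; scale: number }
  | { device: "touchpad"; kind: "pinch"; scale: number }
  | { device: "mouse"; kind: "wheel"; ctrl: boolean; deltaY: number }
  | { device: "keyboard"; kind: "key"; ctrl: boolean; key: string };

// The atomic "zoom object" event that every input method is mapped onto.
interface ZoomEvent {
  type: "zoom";
  factor: number; // > 1 enlarges, < 1 reduces
  // "cursor" and "objectCenter" follow the table; "pinchCenter" is an assumption.
  anchor: "pinchCenter" | "cursor" | "objectCenter";
}

const KEY_STEP = 1.25;  // assumed value for the "N" in the keyboard rule
const WHEEL_STEP = 1.1; // assumed zoom factor per wheel notch

// Map one raw input onto the atomic zoom event, or null if it is not a zoom.
function toZoomEvent(input: RawInput): ZoomEvent | null {
  switch (input.device) {
    case "touchscreen":
    case "touchpad":
      // The touchpad pinch is treated the same as the touchscreen pinch.
      return { type: "zoom", factor: input.scale, anchor: "pinchCenter" };
    case "mouse": {
      if (!input.ctrl || input.deltaY === 0) return null;
      // Assumption: scrolling up (negative deltaY) enlarges.
      const factor = input.deltaY < 0 ? WHEEL_STEP : 1 / WHEEL_STEP;
      return { type: "zoom", factor, anchor: "cursor" };
    }
    case "keyboard":
      if (!input.ctrl) return null;
      if (input.key === "+") {
        return { type: "zoom", factor: KEY_STEP, anchor: "objectCenter" };
      }
      if (input.key === "-") {
        return { type: "zoom", factor: 1 / KEY_STEP, anchor: "objectCenter" };
      }
      return null;
  }
}
```

With a mapping like this, application code would only handle `ZoomEvent` and would not need to know which device produced it.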

We treat actions such as open / switch object, display menu, select object, slide object, zoom object, rotate object, drag object, and refresh page as atomic interaction events, and we define the correct interaction rules for each input method. For details, see the official design guidelines.

Building the OS Input Subsystem

To implement the above design guidelines and the vision of "develop once, deploy on multiple devices," we worked on two technical levels. The first is a normalization mapping of the many existing interaction events in the framework's input subsystem, which resolves most interaction experience problems. The second is an upgrade of the system's full range of UI components to support these normalized events, which gives newly built applications unified interaction by default. Both are built into native HarmonyOS as basic capabilities.
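As a rough illustration of the first level, the normalization mapping can be pictured as a small dispatcher that turns device-specific raw events into atomic events before any component sees them. The sketch below is hypothetical: `InputNormalizer`, `RawEvent`, the rule functions, and the action names are invented for this example and do not describe the actual HarmonyOS input subsystem. It reuses the earlier context-menu example, where a long press on a touchscreen and a right-click with a mouse both become "display menu".

```typescript
// Hypothetical names throughout; not the real HarmonyOS input subsystem API.
type AtomicEventType = "open" | "displayMenu" | "select" | "zoom";

interface RawEvent {
  device: string; // e.g. "touchscreen", "mouse"
  action: string; // e.g. "longPress", "rightClick"
}

// A rule maps a raw event to an atomic event type, or null if it does not apply.
type Rule = (raw: RawEvent) => AtomicEventType | null;
type Handler = (source: RawEvent) => void;

class InputNormalizer {
  private rules: Rule[] = [];
  private handlers: Partial<Record<AtomicEventType, Handler[]>> = {};

  addRule(rule: Rule): void {
    this.rules.push(rule);
  }

  // Components subscribe to atomic events only, never to raw device events.
  on(type: AtomicEventType, handler: Handler): void {
    const list = this.handlers[type] ?? [];
    list.push(handler);
    this.handlers[type] = list;
  }

  // Apply the first matching rule, notify subscribers, and return the atomic type.
  dispatch(raw: RawEvent): AtomicEventType | null {
    for (const rule of this.rules) {
      const type = rule(raw);
      if (type !== null) {
        for (const handler of this.handlers[type] ?? []) handler(raw);
        return type;
      }
    }
    return null;
  }
}

// Usage: two device-specific inputs, one atomic event, one handler.
const normalizer = new InputNormalizer();
normalizer.addRule((r) =>
  r.device === "touchscreen" && r.action === "longPress" ? "displayMenu" : null
);
normalizer.addRule((r) =>
  r.device === "mouse" && r.action === "rightClick" ? "displayMenu" : null
);

const menuOpenedBy: string[] = [];
normalizer.on("displayMenu", (raw) => menuOpenedBy.push(raw.device));
```

In this sketch, supporting a new input method means adding one rule; the handler registered by the component stays unchanged.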

Adaptation and Application

Internally, I participated in implementing the capabilities described above in HarmonyOS and wrote the development guides. Externally, the unified interaction framework, as a feature capability of HarmonyOS, has been presented repeatedly at the Huawei Developer Conference (HDC) and has become one of the official courses.

In terms of applications, the unified interaction framework supports all first-party applications and improves the user experience, especially in multi-device collaboration scenarios. The unified interaction capability also makes new scenarios and new input methods much easier to implement, such as the innovative touchpad gesture interaction on the Huawei MateBook (shown below).